Every search engine's crawler that visits our blog reads its robots.txt file and follows the crawling rules defined there. That makes robots.txt an important part of search engine optimization: it decides which parts of the blog Googlebot and other crawlers spend their time on, which in turn affects how well your posts are discovered and ranked on Google. A blog without a robots.txt file is still crawled (crawlers simply assume every page is allowed), but a well-written file gives you control over the process. If you want to create an optimized robots.txt file and add it to your Blogger blog, you are on the right blog post.

In this article, I will tell you how to add an optimized Robots.txt file to a Blogger blog.

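What is a Custom Robots.txt?

The robots.txt file implements the Robots Exclusion Protocol (also called the robots exclusion standard, now formalized as RFC 9309). It is a plain-text file that tells search engine crawlers which pages of your blog they may crawl and which they should skip. A custom robots.txt is simply one you write yourself instead of relying on Blogger's default.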
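To make "which pages to crawl" concrete, here is a minimal sketch of how a well-behaved crawler might consult robots.txt before fetching a URL, using Python's standard-library `urllib.robotparser`. The two-line rule set and the `www.example.com` URLs are illustrative assumptions, not your blog's real file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block Blogger-style search pages for every crawler.
rules = [
    "User-agent: *",
    "Disallow: /search",
]

parser = RobotFileParser()
parser.parse(rules)  # parse rules from memory instead of downloading them

for url in (
    "https://www.example.com/2024/01/my-first-post.html",
    "https://www.example.com/search?q=seo",
):
    # can_fetch() answers: may this user agent crawl this URL?
    print(url, "->", parser.can_fetch("Googlebot", url))
```

The post URL comes back as allowed and the search URL as blocked. Keep in mind that robots.txt is a request, not access control: a crawler that ignores it can still fetch those pages.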

Why is the robots.txt file important for your Blogger blog?

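The success of a blog depends largely on how it ranks in Google Search, and a page can rank only after Google has crawled and indexed it. Robots.txt influences the crawling step: it tells each search engine bot which pages of your blog to crawl and which to skip, so crawlers spend their time on your posts and pages rather than on search-result and label listings. Note that robots.txt controls crawling, not indexing; a blocked URL can still appear in search results if other pages link to it.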

If your blog has no robots.txt file, Googlebot does not stop crawling it; it simply assumes every page is allowed. Blogger also serves a default robots.txt for every blog. The reason to add a custom robots.txt is control: it lets you decide exactly which sections crawlers should skip and tell them where your sitemap is.

Best Optimized Robots.txt File for a Blogger blog

Blogger/Blogspot is Google’s free blogging service, and for a long time you had no control over a Blogger blog’s robots.txt. Now you can replace the default with your own custom robots.txt. An optimized robots.txt for a Blogger/Blogspot blog looks like this:

    User-Agent: *
    Allow: /
    Disallow: /search
    Disallow: /label
    Crawl-delay: 10
    Sitemap: https://www.example.com/sitemap.xml

Note: Before adding the robots.txt file to your blog, add and verify your blog in Google Search Console. Also replace https://www.example.com in the Sitemap line with your blog’s own address.
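The file above contains both `Allow: /` and `Disallow: /search`, and both match a URL such as `/search?q=seo`. Google resolves such conflicts by using the matching rule with the longest path, and lets `Allow` win a tie (the behavior standardized in RFC 9309). Python's `urllib.robotparser` instead applies the first matching line, so with `Allow: /` listed first it would report every URL as allowed. The sketch below implements longest-match evaluation for plain path prefixes only (no `*` or `$` wildcards) and assumes a single `User-agent: *` group like the one above; `parse_rules` and `is_allowed` are hypothetical helper names.

```python
# The robots.txt from above (blank lines removed).
ROBOTS_TXT = """\
User-Agent: *
Allow: /
Disallow: /search
Disallow: /label
Crawl-delay: 10
Sitemap: https://www.example.com/sitemap.xml
"""

def parse_rules(text):
    """Return (is_allow, path) pairs for every non-empty Allow/Disallow line.

    User-agent, Crawl-delay and Sitemap lines are skipped; this sketch
    assumes all rules belong to one 'User-agent: *' group.
    """
    rules = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field in ("allow", "disallow") and value:
            rules.append((field == "allow", value))
    return rules

def is_allowed(path, rules):
    """Longest matching prefix wins; on a tie, Allow beats Disallow."""
    best = None  # (length of matching rule path, is_allow)
    for is_allow, rule_path in rules:
        if path.startswith(rule_path):
            candidate = (len(rule_path), is_allow)  # True > False breaks ties toward Allow
            if best is None or candidate > best:
                best = candidate
    return True if best is None else best[1]  # no matching rule: allowed

rules = parse_rules(ROBOTS_TXT)
for path in ("/", "/2024/01/my-first-post.html", "/search/label/SEO", "/search?q=seo"):
    print(path, "->", "allowed" if is_allowed(path, rules) else "blocked")
# / -> allowed
# /2024/01/my-first-post.html -> allowed
# /search/label/SEO -> blocked
# /search?q=seo -> blocked
```

Because only the longest match counts, the order of `Allow` and `Disallow` lines does not matter to Google, even though it does matter to simpler first-match parsers.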

Explanation of Custom Robots.txt file

A robots.txt file can use several directives. Here is what the basic ones in the file above mean:

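- Wildcards:
  - * means all (User-agent: * addresses every crawler; inside a path, Google also treats * as "any sequence of characters")
  - / means the root directory, so a rule for / covers every URL on the blog

- User-agent: This directive names the crawler that the rules below it apply to. User-agent: * applies the rules to every search engine bot.

- Allow: This directive tells web crawlers that they may crawl the matching URLs. [For example: Allow: /uploads permits crawling of every URL whose path starts with /uploads.]

- Disallow: This directive tells web crawlers not to crawl the matching URLs. [For example: Disallow: /search tells crawlers not to crawl Blogger’s search-result pages. On Blogger, label pages also live under /search/label/, so this rule already blocks them.]

- Crawl-delay: This directive asks a crawler to wait the given number of seconds between requests. Some crawlers, such as Bingbot, honor it, but Googlebot ignores it.

- Sitemap: This directive gives the full URL of your XML sitemap so crawlers can find all of your posts.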
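Google and RFC 9309 also allow `*` inside a rule path (matching any sequence of characters) and `$` at the end (requiring the URL to end there). The sketch below shows one way such a pattern can be matched by translating it into a regular expression. `pattern_matches` is a hypothetical helper, and the `/*.html$` pattern is only an illustration; the Blogger file above does not need it.

```python
import re

def pattern_matches(pattern, path):
    """Match a robots.txt path pattern against a URL path (including any query).

    '*' matches any run of characters; a trailing '$' means the URL must end
    exactly there. Otherwise the pattern is matched as a prefix.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape regex metacharacters, then turn each escaped '*' back into '.*'.
    regex = re.escape(pattern).replace(r"\*", ".*")
    if anchored:
        regex += "$"
    # re.match anchors at the start of the string, giving prefix semantics.
    return re.match(regex, path) is not None

examples = [
    ("/search", "/search?q=seo"),            # True: plain prefix match
    ("/*.html$", "/2024/01/post.html"),      # True: ends in .html
    ("/*.html$", "/2024/01/post.html?m=1"),  # False: '$' requires .html at the very end
    ("/label", "/search/label/SEO"),         # False: Blogger labels live under /search
]
for pattern, path in examples:
    print(f"{pattern!r} vs {path!r}: {pattern_matches(pattern, path)}")
```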

How to add a custom robots.txt file to Blogger

The robots.txt file always sits at the root of a website (for example, https://yourblog.blogspot.com/robots.txt). Blogger does not give you direct access to the site root, so you cannot upload the file yourself. Instead, Blogger lets you edit robots.txt from its Settings page.
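Because crawlers only look for the file at the root of the host, the robots.txt that governs any page can be derived from that page's URL. A small sketch with Python's `urllib.parse`; `robots_url` is a hypothetical helper and the blogspot address is a placeholder.

```python
from urllib.parse import urlsplit, urlunsplit

def robots_url(page_url):
    """Return the robots.txt URL that applies to page_url (same scheme and host)."""
    parts = urlsplit(page_url)
    # Keep scheme and host (including any port); replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_url("https://yourblog.blogspot.com/2024/01/my-first-post.html?m=1"))
# https://yourblog.blogspot.com/robots.txt
```

Each host (and protocol and port) has its own robots.txt, so a blog served on a custom domain is governed by the file at that domain's root.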

Follow the steps below to add a custom robots.txt to a Blogger blog:

1. Log in to Blogger with your Google (Gmail) account.

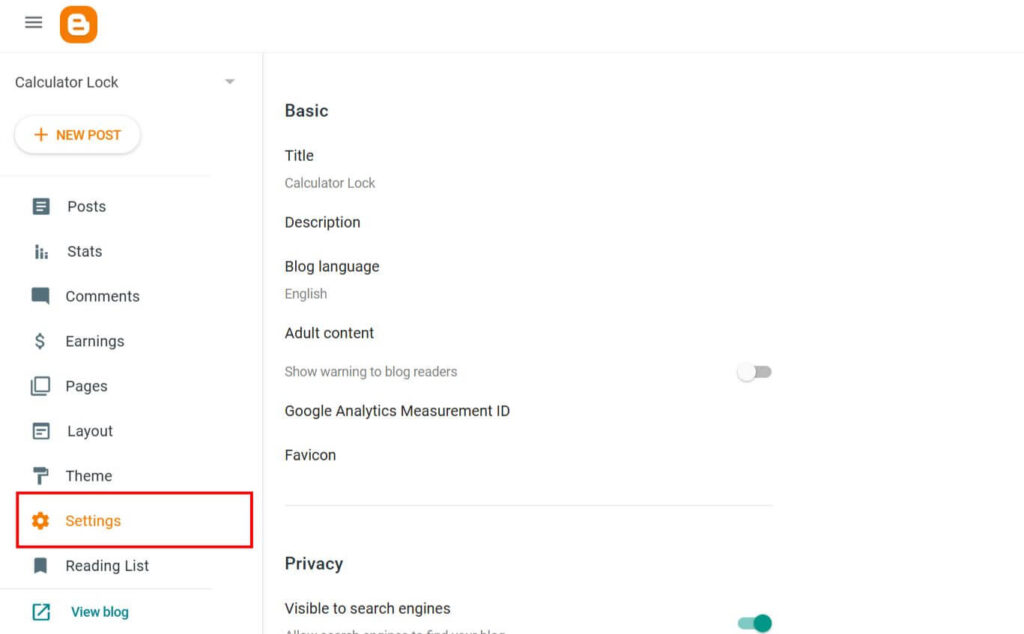

2. Click on Settings from the left sidebar.

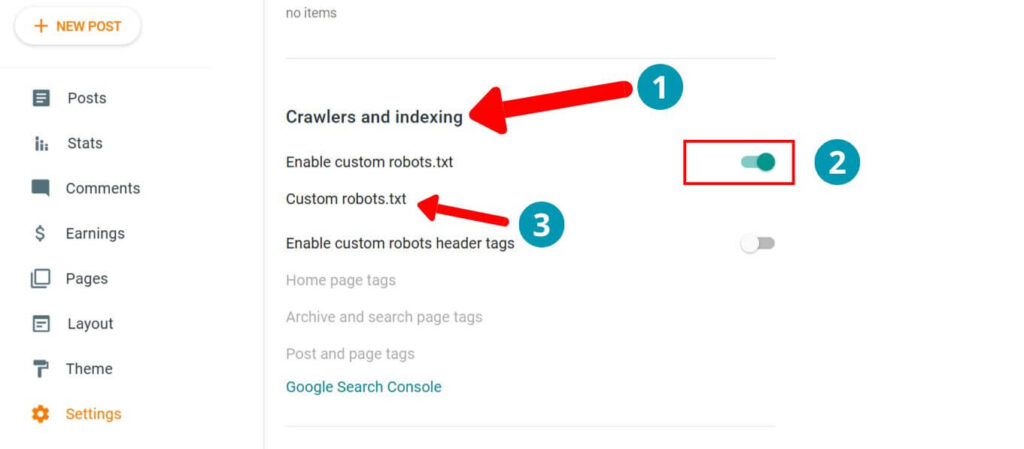

3. Scroll down to the Crawlers and Indexing section.

4. Enable the Custom robots.txt toggle button.

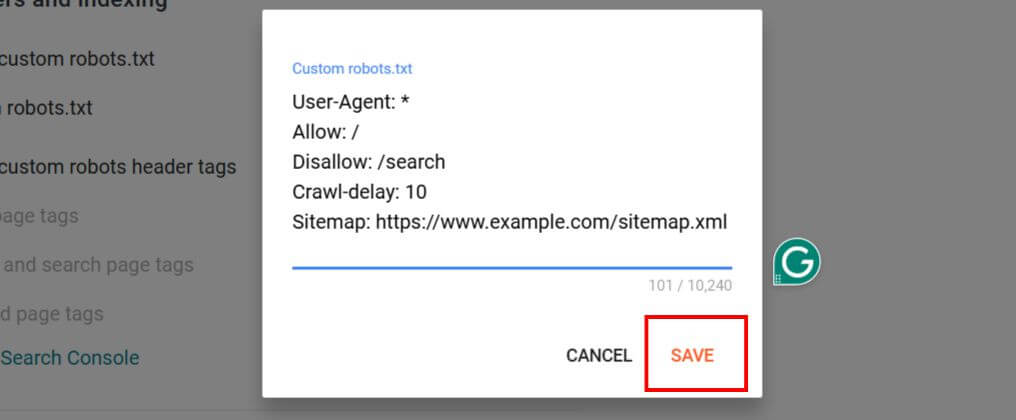

5. Click Custom robots.txt to open the editor, paste the robots.txt code above (with your own sitemap URL), and click Save.
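After saving, you can confirm that Blogger is serving the new rules by opening /robots.txt on your blog in a browser. The same check can be scripted. The sketch below assumes Blogger serves the text exactly as you saved it; `fetch_robots`, `matches_expected`, and the blog address are hypothetical placeholders.

```python
from urllib.request import urlopen

# The rules you pasted into Blogger (replace the Sitemap URL with your own).
EXPECTED = """\
User-Agent: *
Allow: /
Disallow: /search
Disallow: /label
Crawl-delay: 10
Sitemap: https://www.example.com/sitemap.xml
"""

def normalize(text):
    """Trim each line and drop blank lines so spacing differences are ignored."""
    return [line.strip() for line in text.splitlines() if line.strip()]

def fetch_robots(blog_url, timeout=10):
    """Download the robots.txt served at the root of blog_url (needs network access)."""
    with urlopen(blog_url.rstrip("/") + "/robots.txt", timeout=timeout) as resp:
        return resp.read().decode("utf-8")

def matches_expected(live_text, expected_text=EXPECTED):
    """True if the served file contains exactly the expected lines, in order."""
    return normalize(live_text) == normalize(expected_text)

# Example (hypothetical address; run it after clicking Save):
# print(matches_expected(fetch_robots("https://yourblog.blogspot.com")))
```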

Conclusion

We’ve looked at what the robots.txt file does and created an optimized custom robots.txt file for Blogger blogs. Blogger’s default robots.txt also lets crawlers reach the archive section, whose pages repeat content from your posts and can lead to duplicate-content issues. When several URLs show the same content, Google may pick a different URL than the one you want to appear in search results.

Always remember that Google Search Console reports pages blocked by robots.txt, but it is up to you to understand which pages are blocked and why. Used carefully, this custom robots.txt helps crawlers focus on the pages that matter for your SEO.